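Preface

The NGA forum has just started displaying users' IP locations. I had long wanted to find out who the "elites" of 泥潭 (NGA's nickname) really are, so I spent a few hours overnight writing an IP crawler to see which regions the people active on the 大漩涡 board come from.

Crawler

First, configure the request headers: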

    import requests as req
    from lxml import etree
    import numpy as np
    import time
    import re

    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36 Edg/103.0.1264.62',
        'Cookie': '',  # left blank here; fill in your own cookie
        'Connection': 'close',
    }

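Board page

Next, crawl the first few pages of the 网事杂谈 board and collect the link to each thread (see the documentation for the API parameters).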

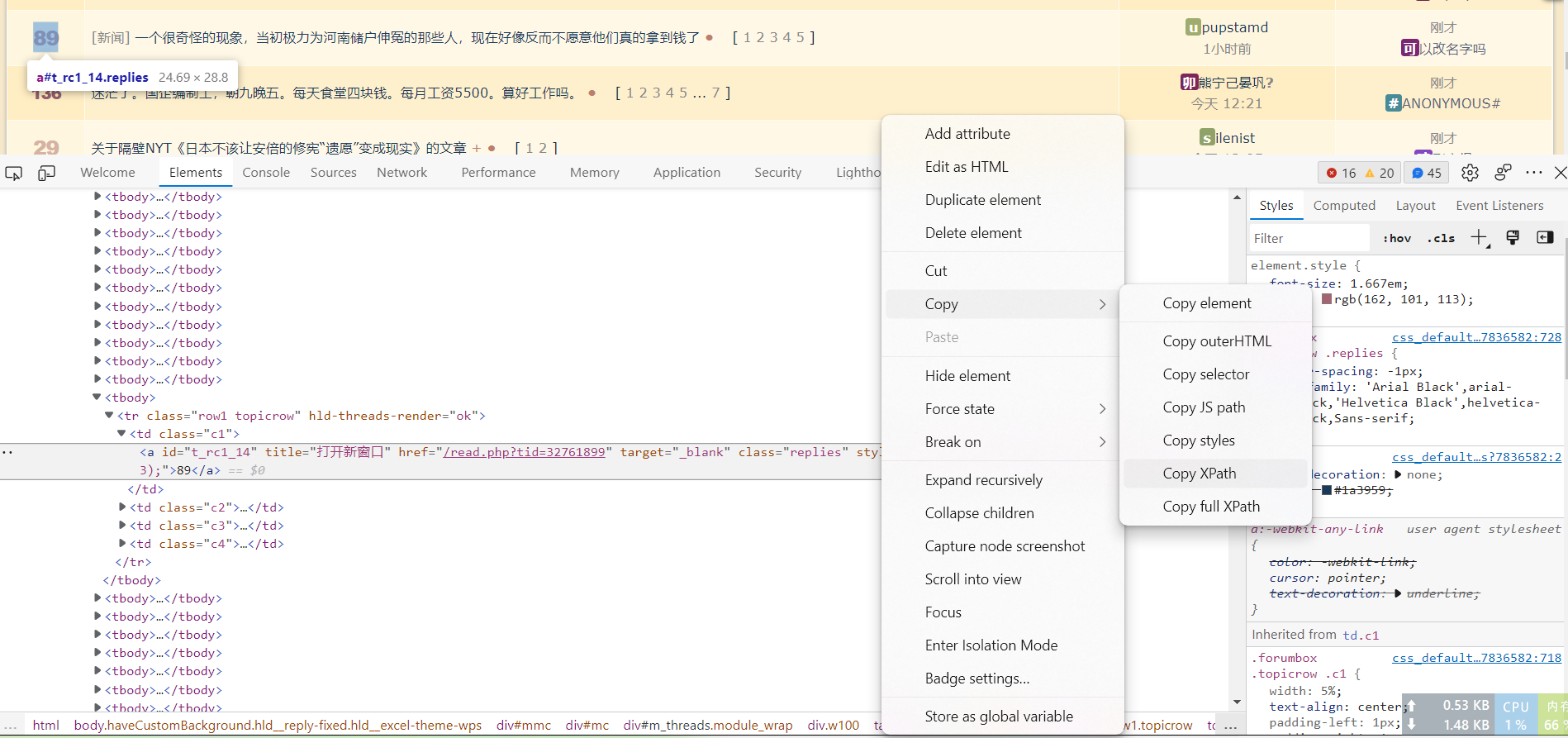

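Press F12 to open the developer tools and locate the element that holds the thread links. The selector used below may not be exact, so adjust it as needed.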

    urls = []
    limit = 5  # number of board pages to crawl
    for i in range(1, limit + 1):
        time.sleep(1)  # throttle requests
        mainPage = req.get('https://bbs.nga.cn/thread.php?fid=-7&order_by=lastpostdesc&page=' + str(i), headers=headers, verify=False)
        doc = etree.HTML(mainPage.text)
        # thread links on the board page; adjust the XPath if the markup differs
        pages_url = doc.xpath('//td[@class="c1"]/a')
        for pg in pages_url:
            # the first run of digits in the href is taken as the thread id
            m = re.search(r'[0-9]+', pg.get('href', ''))
            if m is None:
                continue
            r = m.group()
            urls.append(r)
            print('no.' + str(i) + ' : ' + str(r))
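The extraction step takes the first run of digits in each href as the thread id. A slightly stricter variant is sketched below. It assumes thread links look like `/read.php?tid=<number>`; the sample hrefs are made up for illustration, and `extract_tid` is a hypothetical helper rather than part of the original script.

```python
import re

# Hypothetical helper: pull the thread id out of a thread link.
# Assumes hrefs of the form "/read.php?tid=<digits>..."; returns None otherwise.
TID_PATTERN = re.compile(r'[?&]tid=([0-9]+)')


def extract_tid(href):
    match = TID_PATTERN.search(href)
    return match.group(1) if match else None


# Made-up sample hrefs, for illustration only.
sample_hrefs = [
    '/read.php?tid=12345678',
    '/read.php?tid=42&page=2',
    '/thread.php?fid=-7',
]
tids = [extract_tid(h) for h in sample_hrefs]
print(tids)
```

Matching the `tid` parameter by name avoids picking up an unrelated number if a link ever carries other digits before the thread id.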

Then deduplicate the collected threads:

    urls = list(set(urls))
    print(len(urls))
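Converting to a `set` discards the order in which the threads were crawled. If that order matters (for example, to visit the most recently active threads first), an order-preserving alternative could look like the sketch below. `dedupe_keep_order` is a hypothetical name, and the sample ids are made up.

```python
def dedupe_keep_order(items):
    """Remove duplicates while keeping the first occurrence of each item."""
    # dict keys are unique and preserve insertion order (Python 3.7+)
    return list(dict.fromkeys(items))


# Made-up thread ids, for illustration only.
sample_urls = ['300', '100', '300', '200', '100']
print(dedupe_keep_order(sample_urls))
```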

Thread page

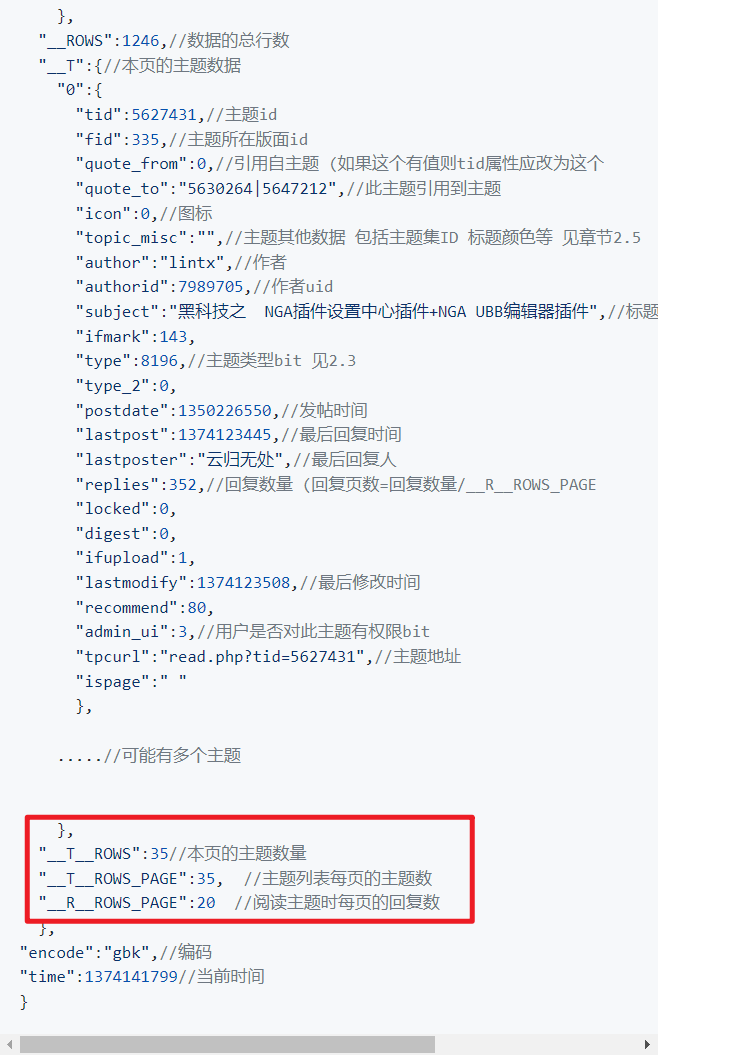

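Next, fetch the first page of each thread (the default), read the total post count from the response to work out how many pages the thread has, and collect the user uids on every page. Deduplicating the uids is optional.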

    uid = []
    for item in urls:
        time.sleep(1)
        page_url = 'https://bbs.nga.cn/read.php?tid=' + str(item) + '&lite=js'
        mainPage = req.get(page_url, headers=headers, verify=False)
        # strip the JS assignment prefix so only the payload remains
        txt = str(mainPage.text).replace('window.script_muti_get_var_store=', '')
        Rows = re.findall(r'"__ROWS"\:[0-9]+', txt)
        if not Rows:
            continue
        # post count -> page count at 20 posts per page (ceiling division,
        # so an exact multiple of 20 does not produce an extra page)
        rows = int(Rows[0].replace('"__ROWS":', ''))
        pageNum = max(1, (rows + 19) // 20)
        print(str(item) + " pages: " + str(pageNum))
        if pageNum > 100:
            continue  # skip very long threads
        for i in range(1, pageNum + 1):
            u = page_url + '&page=' + str(i)
            mainPage = req.get(u, headers=headers, verify=False)
            txt = str(mainPage.text).replace('window.script_muti_get_var_store=', '')
            tmp = re.findall(r'"uid"\:[0-9]+', txt)
            # skip the first "uid" match on each page
            for t in tmp[1:]:
                uid.append(t.replace('"uid":', ''))
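The two parsing steps in this loop, turning the post count into a page count and pulling uids out of the response text, can be checked offline. The sketch below assumes 20 posts per page (taken from the division by 20 in the script). The helper names are hypothetical, and the sample payload is made up: it only mimics the `"__ROWS"` and `"uid"` fields the regular expressions look for, not the real response layout.

```python
import re

POSTS_PER_PAGE = 20  # assumption: taken from the division by 20 in the script


def page_count(total_rows, per_page=POSTS_PER_PAGE):
    """Ceiling division, with a minimum of one page."""
    return max(1, -(-total_rows // per_page))


def parse_rows(txt):
    """Return the "__ROWS" value as an int, or None if it is absent."""
    match = re.search(r'"__ROWS":([0-9]+)', txt)
    return int(match.group(1)) if match else None


def parse_uids(txt):
    """Return every "uid" value except the first match, as the script does."""
    return re.findall(r'"uid":([0-9]+)', txt)[1:]


# Made-up payload, for illustration only.
sample = ('window.script_muti_get_var_store='
          '{"viewer":{"uid":1},"posts":[{"uid":111},{"uid":222}],"__ROWS":41}')

print(parse_rows(sample), page_count(parse_rows(sample)), parse_uids(sample))
```

With ceiling division, 40 posts give 2 pages and 41 posts give 3.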

Getting user IP locations

Look up each user's profile by uid and extract the ipLoc field:

    url = 'https://bbs.nga.cn/nuke.php?lite=js&__lib=ucp&__act=get&uid='
    ips = []
    nums = 0
    for person in uid:
        time.sleep(0.1)
        person_page_url = url + str(person)
        mainPage = req.get(person_page_url, headers=headers, verify=False)
        txt = str(mainPage.text).replace('window.script_muti_get_var_store=', '')
        # ipLoc is matched as a run of Chinese characters
        tmp = re.findall(r'"ipLoc"\:"[\u4e00-\u9fa5]+', txt)
        if not tmp:
            continue
        tmp = tmp[0].replace('"ipLoc":"', '')
        ips.append(tmp)
        print(nums, tmp)
        nums = nums + 1

    # append the collected locations to area.txt, one per line
    with open('area.txt', mode='a', encoding='utf-8') as f:
        for i in ips:
            f.write(i + '\n')
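The ipLoc extraction can be tried on a string without touching the network. This is a minimal sketch: `parse_ip_loc` is a hypothetical helper, and the sample payloads are made up and only contain the `"ipLoc"` field the pattern looks for. Because the pattern accepts only Chinese characters, a location written in any other script would be skipped.

```python
import re

# Same character range as in the script: a run of Chinese characters after "ipLoc":"
IPLOC_PATTERN = re.compile(r'"ipLoc":"([\u4e00-\u9fa5]+)')


def parse_ip_loc(txt):
    """Return the ipLoc value, or None if the field is missing or not Chinese text."""
    match = IPLOC_PATTERN.search(txt)
    return match.group(1) if match else None


# Made-up payloads, for illustration only.
with_loc = 'window.script_muti_get_var_store={"data":{"uid":111,"ipLoc":"上海"}}'
without_loc = 'window.script_muti_get_var_store={"data":{"uid":222}}'

print(parse_ip_loc(with_loc))
print(parse_ip_loc(without_loc))
```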

Processing the results

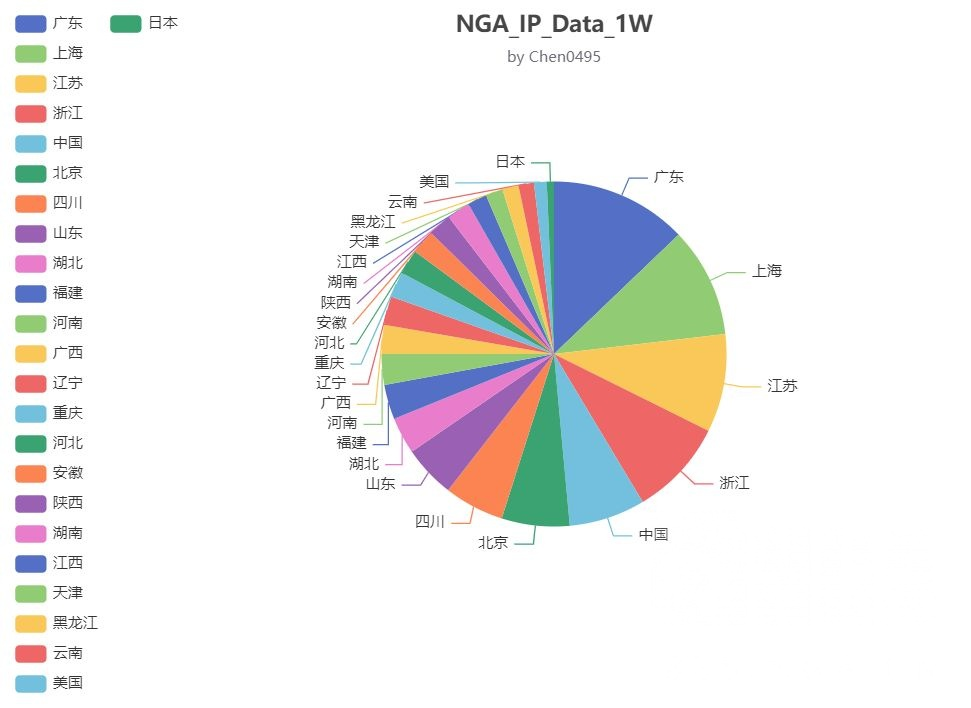

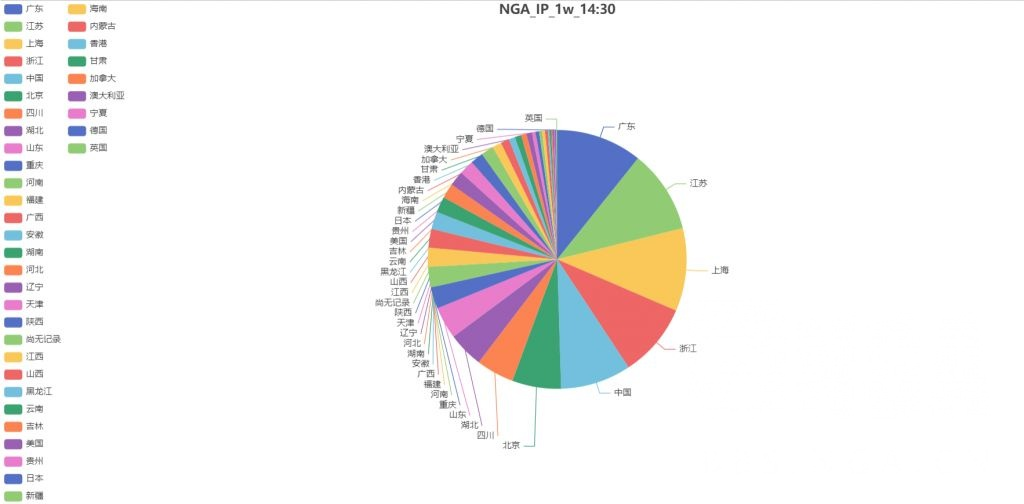

Process the results as needed and plot them.
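One possible way to prepare the data for plotting is to count how often each location appears. The sketch below uses `collections.Counter` on lines in the same one-location-per-line format as `area.txt`. `tally_locations` is a hypothetical helper, and the sample lines are made up, not actual results.

```python
from collections import Counter


def tally_locations(lines):
    """Count non-empty location lines, ignoring surrounding whitespace."""
    cleaned = (line.strip() for line in lines)
    return Counter(loc for loc in cleaned if loc)


# Made-up lines, for illustration only.
sample_lines = ['上海\n', '北京\n', '上海\n', '\n', '广东\n']
counts = tally_locations(sample_lines)

for loc, n in counts.most_common():
    print(loc, n)
```

To run it on the real output, pass an open file handle instead of the sample list, for example `tally_locations(open('area.txt', encoding='utf-8'))`. The resulting counts can then be handed to whatever plotting library you prefer.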